A new AI tool against online hate

[06.02.2024] A better and safer Internet: that is the goal of Safer Internet Day on February 6. A project involving computational linguist Prof. Dr. Torsten Zesch fits this theme: with his help, a new AI tool was developed that identifies hate messages in social media posts that may constitute criminal offenses. The tool is intended to support the work of law enforcement authorities, with the aim of detecting offenses much more quickly and thus combating crime more effectively.

Photo: Hendrik Schipper

"Use of AI for the early detection of criminal offences (KISTRA)" was the name of the BMBF-funded project, in which researchers from six universities worked together with, among others, the Federal Criminal Police Office (BKA) and the Central Office for Information Technology in the Security Sector (ZITiS). "For us, the focus was on the linguistic questions," explains Torsten Zesch, who holds the Chair of Computational Linguistics at the CATALPA research center. The challenge: the AI not only has to recognize hate posts but also has to assign them to specific criminal offenses, such as insult, threat, or incitement to hatred. To this end, Zesch and his team worked closely with legal experts, including Prof. Dr. Frederike Zufall from the Karlsruhe Institute of Technology (KIT). They broke each offense down into individual, interlinked building blocks, which Zesch was then able to "translate" into a recognition algorithm. "That was actually a good fit, because laws are structured according to a logic similar to that of computer programs," says Zesch.
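To illustrate the idea that an offense can be decomposed into building blocks, much like program logic, the following Python sketch models each offense as a set of yes/no checks that must all hold. It is a hypothetical toy, not the KISTRA algorithm: the block names, the keyword tests standing in for trained classifiers, and the two offense rules are assumptions and do not reflect the actual legal elements of any offense.

```python
import re
from typing import Callable

# A building block answers one yes/no question about a post.
Block = Callable[[str], bool]


def has_any(*words: str) -> Block:
    """HYPOTHETICAL stand-in for a trained classifier: fires if the post
    contains any of the given words as whole tokens."""
    def check(post: str) -> bool:
        tokens = set(re.findall(r"\w+", post.lower()))
        return any(word in tokens for word in words)
    return check


# Hypothetical building blocks (keyword lists are illustrative only).
targets_person = has_any("you", "he", "she")
degrading = has_any("idiot", "moron")
announces_harm = has_any("kill", "hurt", "beat")

# Each offense is a conjunction of blocks; blocks can be shared between
# offenses. These are toy rules, NOT legal definitions.
OFFENSES: dict[str, list[Block]] = {
    "insult": [targets_person, degrading],
    "threat": [targets_person, announces_harm],
}


def classify(post: str) -> list[str]:
    """Return every offense whose building blocks all fire for the post."""
    return [name for name, blocks in OFFENSES.items()
            if all(block(post) for block in blocks)]


print(classify("You idiot, I will hurt you"))  # ['insult', 'threat']
```

Because a block such as `targets_person` is shared between rules, one post can be assigned to several offenses at once, which is one way the "interlinked" structure could be expressed in code.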

Reliable results despite perturbations

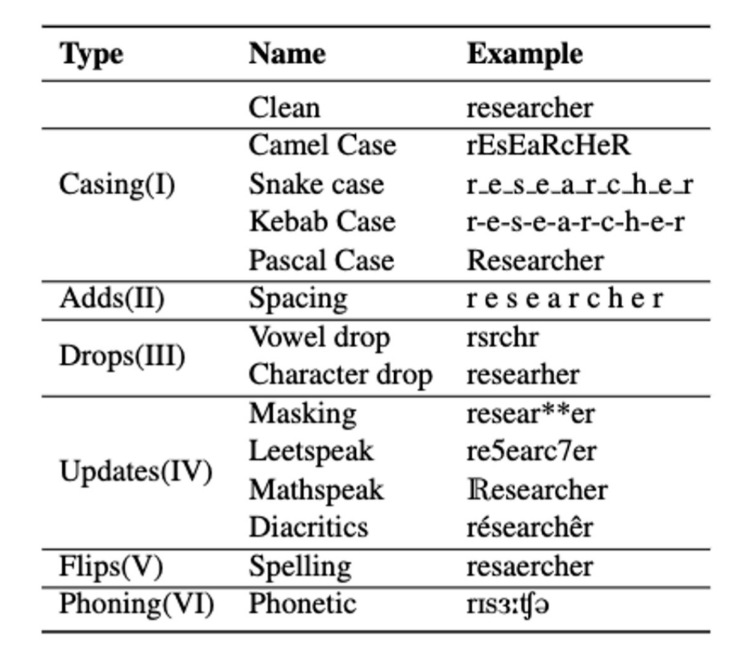

Much more difficult was achieving reliable results when, for example, a hate post contains spelling errors or obfuscations. "This is often done deliberately, presumably to avoid automated detection," explains Zesch. For example, letters are left out or replaced with numbers or special characters. In computational linguistics, such perturbations are referred to as "noise". Torsten Zesch and his research assistant Piush Aggarwal identified six different obfuscation strategies, some with subcategories (see image). They developed methods to handle these strategies and still achieve stable results. "Robustness" is the technical term for this.
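As a concrete picture of one kind of "noise", the Python sketch below undoes simple character substitution and removes separators inserted between letters. It covers only this one strategy and is not the method developed by Zesch and Aggarwal; the look-alike table and the `normalize` function are hypothetical.

```python
import re

# HYPOTHETICAL look-alike table for one obfuscation strategy (character
# substitution). Real mappings are ambiguous: "1" may stand for "i" or "l".
LOOKALIKES = str.maketrans({
    "0": "o", "1": "i", "3": "e", "4": "a",
    "5": "s", "7": "t", "@": "a", "$": "s",
})


def normalize(text: str) -> str:
    """Lowercase, map look-alike characters back to letters, and drop
    separators inserted between letters (as in "i.d.i.o.t")."""
    text = text.lower().translate(LOOKALIKES)
    return re.sub(r"(?<=[a-z])[.*_-](?=[a-z])", "", text)


print(normalize("You 1d10t!"))  # you idiot!
print(normalize("l0$er"))       # loser
print(normalize("i.d.i.o.t"))   # idiot
```

Applied blindly, such a table would also rewrite genuine numbers ("5 people" becomes "s people"), which illustrates why a fixed lookup table on its own is not robust.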

From hate speech to exam assessment

Illustration: CATALPA

The methods developed in KISTRA also benefit Zesch's core research at CATALPA, which focuses on the language of learners. For example, he is currently working on using AI to evaluate free-text answers in exams. "Robustness" is a key objective here as well: in most university exams, spelling mistakes should not affect the assessment. "Learners make such mistakes unconsciously and for very different reasons," explains Zesch, "for example because German is not their native language, or simply through carelessness." In such cases, the AI must still recognize the intended word. This already works quite well: a first pilot study with bachelor's exams in psychology was very promising.
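The following Python sketch shows one simple way an automated grader might tolerate misspellings when checking whether an answer mentions expected key terms. It uses general-purpose string similarity from Python's standard `difflib` module, not the approach used at CATALPA; the key terms, the `0.8` similarity cutoff, and both function names are illustrative assumptions.

```python
import difflib
import re


def correct_word(word: str, vocabulary: list[str], cutoff: float = 0.8) -> str:
    """Map a possibly misspelled word to the closest vocabulary entry,
    or return it unchanged if nothing is similar enough."""
    matches = difflib.get_close_matches(word, vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else word


def key_terms_found(answer: str, key_terms: list[str]) -> list[str]:
    """Return the (hypothetical, lowercase) expected key terms that occur in
    a free-text answer, tolerating small spelling mistakes."""
    tokens = re.findall(r"\w+", answer.lower())
    corrected = {correct_word(token, key_terms) for token in tokens}
    return sorted(set(key_terms) & corrected)


terms = ["reinforcement", "stimulus", "extinction"]
print(key_terms_found("Negative reinforcment removes an aversive stimulis.", terms))
# ['reinforcement', 'stimulus']
```

The cutoff is a trade-off: set too low, distinct technical terms start to be merged; set too high, genuine misspellings are no longer recognized.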

Another challenge for "robust" results is learners' handwriting, which can be more or less legible. "Handwriting also differs depending on cultural background," says Zesch. "In the United States, for example, the numerals 1 and 7 are written very differently than in Germany, yet the AI must assess both variants in the same way in an exam." The methods used for this are similar to those used in the hate speech project.

Decisions remain in the hands of humans

There is another parallel: in both cases, the AI is only meant to make people's work easier. In KISTRA, this was an explicit requirement: "The decision on criminal liability still lies with the investigating officers," explains Zesch. Likewise, final exam grades should remain in human hands, with the AI serving merely as an aid.

AI in security research

The KISTRA project was part of the German government's "Research for Civil Security" framework program, under the "Artificial Intelligence in Security Research" funding guideline. The fourth phase of the framework program has been running since the beginning of 2024.