
Alternative Communication for Speech and Mobility Impaired Persons

Simone Eidam, Jens Garstka, Gabriele Peters

Publications:

- Jens Garstka, Simone Eidam, and Gabriele Peters, A Virtual Presence System Design with Indoor Navigation Capabilities for Patients with Locked-In Syndrome, International Journal on Advances in Life Sciences, Vol. 8 (3/4), pp. 175-183, 2016.

- Simone Eidam, Jens Garstka, and Gabriele Peters, Towards Regaining Mobility Through Virtual Presence for Patients with Locked-in Syndrome, 8th International Conference on Advanced Cognitive Technologies and Applications (COGNITIVE 2016), Rome, Italy, March 20-24, 2016. ► Best paper award.

- Simone Eidam, Erkennung von Augengesten zur Sonifikation von Bildinhalten - Prototyp eines Basiskommunikationssystems für sprach- und bewegungseingeschränkte Personen [Recognition of Eye Gestures for the Sonification of Image Content: Prototype of a Basic Communication System for Speech and Mobility Impaired Persons], Bachelor Thesis in Computer Science, Faculty of Mathematics and Computer Science, University of Hagen, 2015.


Summary

We developed a prototype of an alternative communication system for speech and mobility impaired persons. It enables patients to select objects and to communicate their needs to caregivers by means of an eye tracking device. Scenes such as a table with different objects, for example a book, a plate, or a TV set, are displayed on a screen, and the patients can use eye gaze and eye gestures to communicate with their environment via the screen. The prototype can deliver notifications to caregivers via a text-to-speech module. For example, an eye-gesture based interaction with a cup of tea displayed on the screen could trigger the message "I am thirsty". Direct interactions with the environment are also possible, such as switching the light on or off by gazing at a remote control or a light switch.

Existing eye-gesture based approaches to alternative communication for persons with impairments are usually based on interaction with static screen content such as on-screen 2D keyboards or pictograms, which is not very intuitive and quickly becomes tedious. The proposed approach differs from these in that patients interact directly with depictions of objects from their environment, which is intended to provide a faster, less complex, less error-prone, and more intuitive method of alternative communication.

Speech and Mobility Impaired Persons

For persons with severe mobility and speech impairments, augmentative and alternative communication systems are an important technology. They can provide improved communication possibilities and better prospects of interacting with the environment, and thus substantially improve the quality of life of the persons concerned.

Among those who benefit most from communication devices based on eye gestures are patients with amyotrophic lateral sclerosis (ALS), a disorder that, among other things, involves a gradual loss of muscular strength and speaking ability, as well as patients with locked-in syndrome. The most severe form of this syndrome is accompanied by complete paralysis of almost all voluntary muscles, which implies the inability to speak. In most cases, these patients are still able to blink and to perform at least vertical eye movements, and they are usually fully conscious and able to hear.

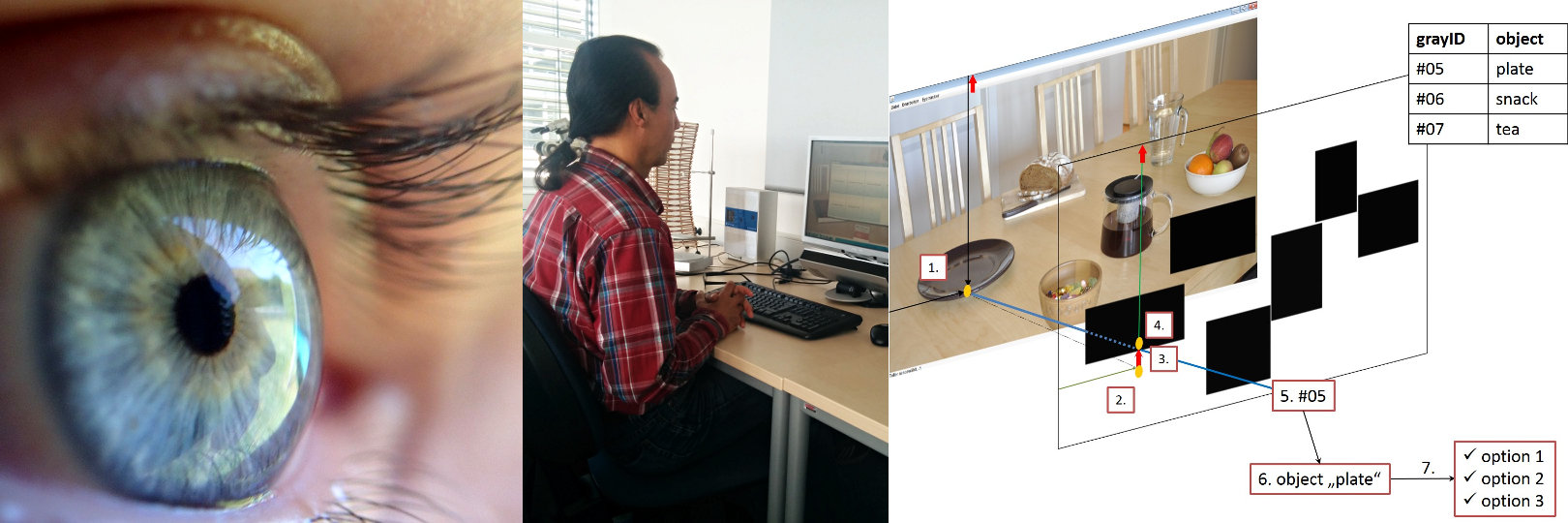

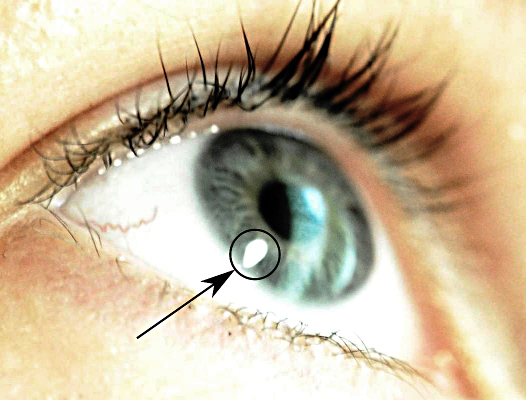

Eye Tracking

The prototype employs dark-pupil tracking: the point of regard, i.e., the point a patient fixates, is determined by camera-based detection of both the position of the pupil center and the position of the reflections that an additional infrared light source produces on the cornea.

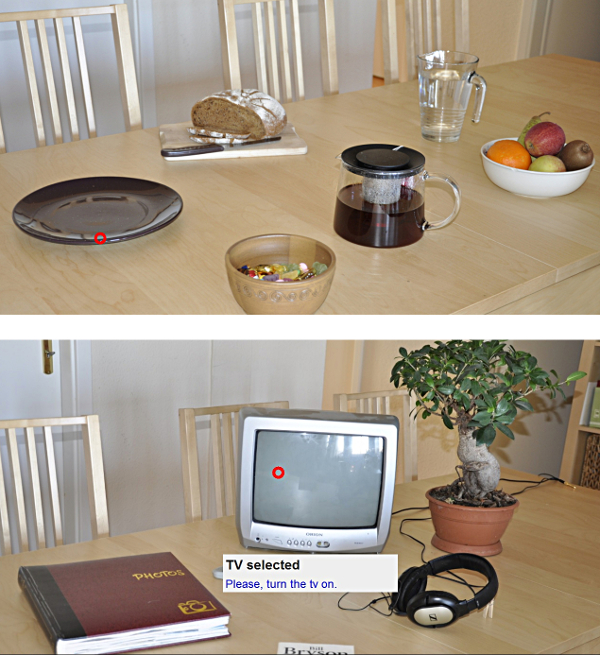
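The text does not specify how the pupil center and the corneal reflection are converted into a point of regard. A common approach for this kind of pupil-center/corneal-reflection tracking, assumed here purely for illustration, takes the vector from the corneal reflection (glint) to the pupil center and maps it to screen coordinates with a second-order polynomial whose coefficients are fitted by least squares during a calibration in which the user fixates known on-screen targets. The function names (`design_matrix`, `fit_calibration`, `point_of_regard`) and the polynomial model are hypothetical and not taken from the prototype.

```python
import numpy as np


def design_matrix(v: np.ndarray) -> np.ndarray:
    """Second-order polynomial terms [1, x, y, xy, x^2, y^2] for N x 2 vectors v."""
    x, y = v[:, 0], v[:, 1]
    return np.column_stack([np.ones_like(x), x, y, x * y, x**2, y**2])


def fit_calibration(pupil, glint, targets) -> np.ndarray:
    """Fit a polynomial mapping from pupil-glint vectors to screen coordinates.

    pupil, glint: N x 2 image coordinates of pupil center and corneal reflection
    targets:      N x 2 screen coordinates of the fixated calibration targets
    Returns a 6 x 2 coefficient matrix (least-squares solution).
    """
    v = np.asarray(pupil, float) - np.asarray(glint, float)
    coeffs, *_ = np.linalg.lstsq(design_matrix(v), np.asarray(targets, float), rcond=None)
    return coeffs


def point_of_regard(pupil, glint, coeffs: np.ndarray) -> np.ndarray:
    """Map pupil and glint positions (one sample or N x 2) to screen coordinates."""
    v = np.atleast_2d(np.asarray(pupil, float) - np.asarray(glint, float))
    return design_matrix(v) @ coeffs
```

At least six calibration targets are needed for the six polynomial terms; a 3 x 3 grid of targets is a typical choice. The pupil-glint difference vector is used instead of the raw pupil position because it is less sensitive to small head movements.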

Sample Interaction

The impaired person sees an image of a scene with typical everyday objects such as drinks, plates, fruit, etc. These images stand in for real scenes, which in future work are to be captured by a camera directly in the patient's environment and analyzed by an object recognition framework. For example, a patient can fixate the plant to let a caregiver know that she or he would like to go to the garden; the TV set can be used to express the wish to switch it on or to start a TV application on the internet. The red circles shown in the sample scenes illustrate the points of regard calculated by the eye tracking module. This visual feedback for the patient can be activated or deactivated according to individual preferences.

An object is selected by gazing at it for a predetermined period of time. After a successful selection, a set of options is displayed on the screen, and the patient chooses one of these options with an eye blink. She or he then receives visual feedback on the screen about the selected option, and, depending on the selection, either a direct action is triggered (such as switching on the light) or a corresponding text is synthesized as speech (such as "I would like to go to the garden"). Object selection is thus realized through fixation, while option selection is done by blinking. Because options are chosen by blinking rather than by fixation, the patient can rest the eyes while the option panel is displayed and calmly scan the offered options to get an overview.
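The interaction scheme can be sketched as a small state machine. This is a minimal sketch under several assumptions that the text does not state: the eye tracker delivers timestamped samples with the name of the object or option under the point of regard, a blink shows up as a period in which the eyes are reported closed, an option is chosen by blinking while looking at it, and closures outside a configurable duration range are ignored so that involuntary blinks do not trigger a selection. The class names and default values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class GestureSettings:
    """Hypothetical per-user settings; the values are illustrative defaults."""
    dwell_s: float = 1.0      # fixation time needed to select an object
    blink_min_s: float = 0.3  # shorter closures count as involuntary blinks
    blink_max_s: float = 1.5  # longer closures count as resting the eyes


class SelectionStateMachine:
    """Object selection by dwell time, option selection by a deliberate blink."""

    def __init__(self, settings: GestureSettings) -> None:
        self.settings = settings
        self.mode = "objects"  # "objects" or "options"
        self._dwell_target: Optional[str] = None
        self._dwell_start = 0.0
        self._closed_since: Optional[float] = None

    def update(self, t: float, target: Optional[str], eyes_open: bool) -> Optional[Tuple[str, str]]:
        """Process one sample.

        t:         timestamp in seconds
        target:    name of the object or option under the point of regard, or None
        eyes_open: False while the tracker reports the eyes as closed
        Returns ("object", name) or ("option", name) when a selection happens.
        """
        s = self.settings
        if not eyes_open:
            if self._closed_since is None:
                self._closed_since = t
            self._dwell_target = None  # a closure interrupts any running fixation
            return None

        if self._closed_since is not None:  # the eyes have just reopened
            closed_for = t - self._closed_since
            self._closed_since = None
            if (self.mode == "options" and target is not None
                    and s.blink_min_s <= closed_for <= s.blink_max_s):
                self.mode = "objects"
                return ("option", target)

        if self.mode == "objects":
            if target != self._dwell_target:  # gaze moved: restart the dwell timer
                self._dwell_target, self._dwell_start = target, t
            elif target is not None and t - self._dwell_start >= s.dwell_s:
                self.mode = "options"
                self._dwell_target = None
                return ("object", target)
        return None
```

In this sketch, gazing around the option panel never selects anything, which mirrors the resting behavior described above. Closing the eyes resets the dwell timer, so a fixation interrupted by a blink has to start over; this is a design choice of the sketch, not a documented property of the prototype.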

Components of the Prototype Test Scenario

- Area 1: Components used to test different scenes with different objects, i.e., the screen and the eye tracking device.
- Area 2: Stationary eye tracking unit below the screen.
- Area 3: Eye tracking workstation.
- Area 4: Eye tracking control software.
- Area 5: Table lamp, which can be turned on and off directly via eye gestures on the displayed scene.

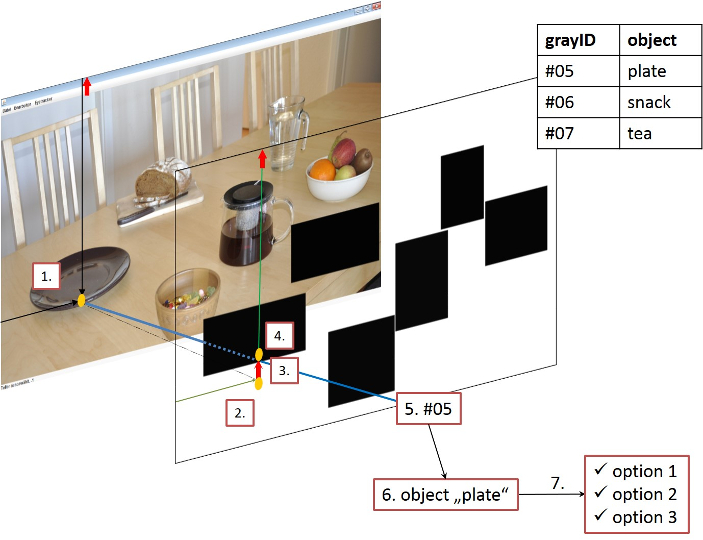

Method

In the prototype, the object recognition module is only simulated. In future work, it is to be replaced by an automatic object recognition approach, for example one based on deep convolutional neural networks, which have considerably improved the performance of object recognition. The simulated object recognition is based on a gray-scale mask for the scene image, which assigns a unique gray value (grayID) to each of the available objects. The coordinates of a point of regard (1.) refer to the screen, not to the scene image, which may be smaller. These raw coordinates are therefore corrected by the offset of the scene image on the screen (2., 3.). The corrected values correspond to a pixel (4.) in the mask whose value (5.) may belong to one of the objects shown. In the illustrated example, this pixel has the grayID "#05", which corresponds to the object "plate" (6.). Finally, either all options available for this object are displayed (7.), or nothing happens if the coordinates do not refer to any object.
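The lookup steps (1.) to (7.) can be sketched in a few lines. The sketch assumes that the mask is stored as rows of integer gray values with 0 for the background, that the grayID "#05" corresponds to the integer 5, and that the offset is the position of the scene image's top-left corner on the screen. The lookup tables, the grayIDs other than 5, and the option texts apart from the garden example are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

# Hypothetical lookup tables: grayID -> object, object -> options to display.
GRAY_ID_TO_OBJECT = {5: "plate", 12: "plant", 20: "tv_set"}
OBJECT_OPTIONS = {
    "plate": ["I am hungry", "Please take the plate away"],
    "plant": ["I would like to go to the garden"],
    "tv_set": ["Switch the TV on", "Start a TV application"],
}


def options_for_gaze(screen_x: float, screen_y: float,
                     offset: Tuple[int, int],
                     mask: Sequence[Sequence[int]]) -> Optional[List[str]]:
    """Return the options of the object under a point of regard, or None.

    screen_x, screen_y: point of regard in screen coordinates (1.)
    offset: position of the scene image's top-left corner on the screen
    mask:   gray-scale mask with the same size as the scene image
    """
    # (2., 3.) shift screen coordinates into scene-image coordinates
    x = int(round(screen_x)) - offset[0]
    y = int(round(screen_y)) - offset[1]
    if not (0 <= y < len(mask) and 0 <= x < len(mask[0])):
        return None  # the point of regard lies outside the scene image
    gray_id = mask[y][x]                  # (4., 5.) pixel value in the mask
    obj = GRAY_ID_TO_OBJECT.get(gray_id)  # (6.) grayID -> object
    if obj is None:
        return None                       # no object hit: nothing happens
    return OBJECT_OPTIONS[obj]            # (7.) options to display
```

Because the mask has the same size as the scene image, replacing the simulated recognition later would only require producing such a mask (or an equivalent per-pixel object label) from a real recognizer.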

Results

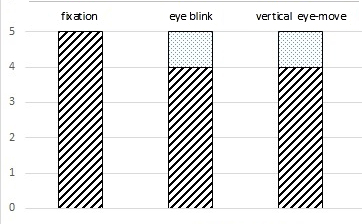

The prototype has been evaluated with five non-impaired subjects to analyze its basic usability. The diagram shows the number of subjects who accomplished each task successfully at the first attempt. None of the subjects had any problems with fixation. One subject required several attempts for option selection via eye blink. The same was true for the task involving vertical eye movements. The functionality of the prototype was reported to be stable and accurate. With a well-calibrated eye tracker, the basic handling, which combines fixation and eye blinks, was perceived as comfortable. In addition, the eye gesture settings can be adjusted individually at any time. This enables an impaired person to achieve optimal eye gesture recognition results and reliable handling.
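The text does not describe how vertical eye movements are recognized or which eye gesture settings can be adjusted. As an illustration only, the following sketch detects an upward or downward gesture when the vertical coordinate of the point of regard changes by at least a threshold distance within a time window. Both parameters are hypothetical settings; because the detector reads them on every sample, they can be changed at any time without restarting it.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Optional, Tuple


@dataclass
class VerticalGestureSettings:
    """Hypothetical, individually adjustable settings."""
    min_distance_px: float = 200.0  # vertical gaze travel that counts as a gesture
    max_duration_s: float = 0.6     # time window in which the travel must happen


class VerticalGestureDetector:
    def __init__(self, settings: VerticalGestureSettings) -> None:
        self.settings = settings
        self._samples: Deque[Tuple[float, float]] = deque()

    def update(self, t: float, y: float) -> Optional[str]:
        """Feed a timestamp (s) and the vertical screen coordinate y (px, growing downwards).

        Returns "up" or "down" when a gesture is recognized, otherwise None.
        """
        self._samples.append((t, y))
        # Drop samples that are older than the time window.
        while t - self._samples[0][0] > self.settings.max_duration_s:
            self._samples.popleft()
        dy = y - self._samples[0][1]  # travel since the oldest sample in the window
        if abs(dy) >= self.settings.min_distance_px:
            self._samples.clear()  # start fresh after a recognized gesture
            return "down" if dy > 0 else "up"
        return None
```

A slow drift of the gaze never exceeds the threshold within the window and is therefore not reported, while lowering `min_distance_px` would suit a user who can only perform small vertical eye movements.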

Vision of the Future

To extend this prototype, it has to be equipped with an object recognition module that can recognize a reasonable number of everyday objects. A live view of the patient's dynamically changing environment can then be displayed on the screen, making it possible to interact with objects in the real environment. This may enhance the feeling of direct participation in the environment for severely speech and mobility impaired persons.


- © FernUniversität in Hagen

FernUniversität in Hagen, Faculty of Mathematics and Computer Science, Human-Computer Interaction, 58084 Hagen
